User-Elicited Surface and Motion Gestures for Object Manipulation in Mobile Augmented Reality

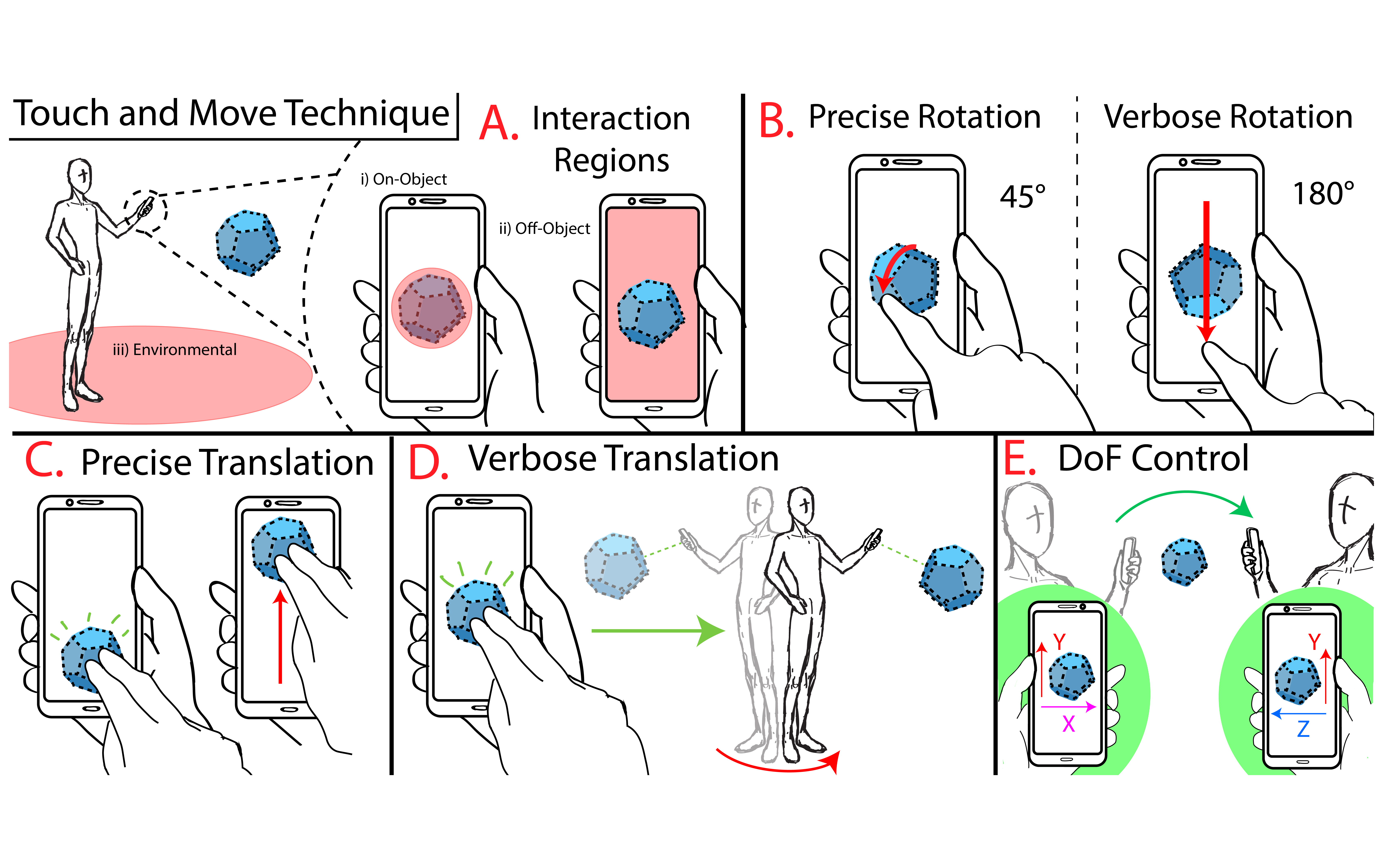

Recent advancements in mobile and AR technology can facilitate powerful and practical solutions for six degrees of freedom (6DOF) manipulation of 3D objects on mobile devices. However, existing 6DOF manipulation research typically focuses on surface gestures, relying on widgets for modal interaction to segment manipulations and degrees of freedom at the cost of efficiency and intuitiveness. In this paper, we explore a combination of surface and motion gestures to present an implicit modal interaction method for 6DOF manipulation of 3D objects in Mobile Augmented Reality (MAR). We conducted a guessability study that focused on key object manipulations, resulting in a set of user-defined motion and surface gestures. Our results indicate that the user-defined gestures have reasonable degrees of agreement and are also easy to use. Additionally, we present a prototype system that makes use of a consensus set of gestures that leverage user mobility for manipulating virtual objects in MAR.

MobileHCI '22 Poster

- Citation: Daniel Harris, Dominic Potts, and Steven Houben. 2022. User-Elicited Surface and Motion Gestures for Object Manipulation in Mobile Augmented Reality. In Adjunct Publication of the 24th International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI '22). Association for Computing Machinery, New York, NY, USA, Article 13, 1–6. https://doi.org/10.1145/3528575.3551443